I believe that people with disabilities often end up as unwilling accessibility testers. Any time a person with a disability interacts with the web, they may be unwittingly performing testing because so many websites are not fully accessible to everyone. These inaccessible websites and applications present challenges to people with differing abilities. Why not hire and incorporate people with disabilities into formal accessibility testing? This article will review the challenges and possible solutions.

Overview of Accessibility Testing

Responsible organizations will develop and test their websites and digital assets for accessibility. They will include people with disabilities throughout the entire process. Some are motivated for the right reasons; they want to offer all people access to their services. Others may be responding to a lawsuit or are taking action to prevent a lawsuit. Some organizations will contract a third party to perform an accessibility audit. As a member of Knowbility’s technical team, I participate in many of these audits.

What is involved in performing an accessibility audit? Here is a brief overview of how Knowbility approaches the process.

Once the job is scoped and we know what representative pages need to be tested, the team will split up the work and begin testing. We test against the Web Content Accessibility Guidelines (WCAG) 2.0 or WCAG 2.1. We encourage WCAG 2.1 Level AA compliance. The WCAG 2.0 specification was released in 2008. At that time, mobile computing was not prevalent. WCAG 2.1 was released in June of 2018 and contains additional success criteria to better support mobile users, people with low vision, and people with cognitive disabilities. The Knowbility team follows a standard process but each member of the team may have a slightly different approach. We perform three levels of testing during an audit:

- Using automated tools to catch issues such as duplicate IDs, missing page titles, and insufficient color contrast.

- Testing the functional operation of the website using only a keyboard, a screen reader, and with low vision tools such as magnification, zoom, and high contrast settings.

- Reviewing the code via the browser web inspection tools.
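To make the first level concrete, here is a minimal sketch of the kind of check an automated tool performs. It is not how any particular tool is implemented; the class and function names (`BasicAuditParser`, `basic_audit`) are hypothetical, and it uses only Python's standard `html.parser` module to look for duplicate IDs and a missing page title in a string of HTML.

```python
from collections import Counter
from html.parser import HTMLParser


class BasicAuditParser(HTMLParser):
    """Collects id attribute values and the text of the <title> element."""

    def __init__(self):
        super().__init__()
        self.ids = Counter()
        self.title_text = ""
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True
        for name, value in attrs:
            if name == "id" and value:
                self.ids[value] += 1

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title_text += data


def basic_audit(html):
    """Return a list of findings (hypothetical wording) for an HTML string."""
    parser = BasicAuditParser()
    parser.feed(html)
    parser.close()
    findings = []
    for value, count in sorted(parser.ids.items()):
        if count > 1:
            findings.append(f"duplicate id '{value}' used {count} times")
    if not parser.title_text.strip():
        findings.append("page has no non-empty <title>")
    return findings
```

A check like this only reports what can be decided mechanically. Whether a title that is present actually describes the page still needs a human reviewer.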

In addition to finding and reporting errors, we provide additional guidance on how to find and fix the problem. We write a detailed description of each error and explain how the issue affects people with disabilities. The description identifies the violated WCAG success criteria. We generally include screenshots to highlight the exact problem. If appropriate, we add code snippets to identify the problem, provide information on how to address the issue, and even the recommended code to fix. We also provide links to our own and other resources that explain recommended solutions. To ensure complete coverage, all issues are peer reviewed.

Once the audit is complete, we provide a detailed report of all of the issues and their respective potential fixes to the client. After they have time to review, we schedule a follow-up meeting with the client to review the report and the findings. Other good auditing organizations will do the same.

My Process

When I start reviewing a page or website for accessibility I start with automated tools. The Web Accessibility Evaluation Tool (WAVE) from WebAIM is one of the tools I rely upon often. WAVE is a very visual testing tool that identifies many errors: images without alt text, forms without labels, improper heading structure, color contrast, use and misuse of ARIA, and much more. The errors are visually identified via icons inserted into the page. The errors can be filtered so that only certain types are visible at one time.

I use WAVE to identify and highlight color contrast issues. Once identified, the Chrome Developer tools will show me the exact colors and contrast ratios and allow me to modify the values within the code. I use this information to make alternate color recommendations to the client. WAVE also identifies images that have no alt text. But, like other tools, WAVE cannot tell me if the alt text is valid. I have to manually check that the alt text describes the image or if the image has been properly or improperly marked as decorative. This requires inspecting the code.
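The contrast ratios these tools report come from the formula WCAG defines: each color is converted to a relative luminance, and the ratio is (lighter + 0.05) / (darker + 0.05). The sketch below applies that formula to six-digit hex colors. The function names are my own, and the thresholds are the Level AA values of 4.5:1 for normal text and 3:1 for large text.

```python
def _linear_channel(value_8bit):
    """Convert one 8-bit sRGB channel to its linear value (WCAG definition)."""
    c = value_8bit / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color):
    """Relative luminance of a six-digit hex color such as '#767676'."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return (0.2126 * _linear_channel(r)
            + 0.7152 * _linear_channel(g)
            + 0.0722 * _linear_channel(b))


def contrast_ratio(foreground, background):
    """WCAG contrast ratio between two colors, from 1.0 up to 21.0."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)),
        reverse=True,
    )
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(foreground, background, large_text=False):
    """True if the pair meets the Level AA contrast minimum."""
    return contrast_ratio(foreground, background) >= (3.0 if large_text else 4.5)
```

For example, `passes_aa("#777777", "#ffffff")` is false while `passes_aa("#767676", "#ffffff")` is true, which shows how a one-step change in a gray value can be the difference between failing and passing. A helper like this can be handy when proposing alternate colors to a client.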

While WAVE is very visual, my testing showed that it is keyboard accessible. I didn’t need any specific instructions and the popup and tabpanel implementations followed the WAI-ARIA Authoring Practices 1.1 for those components. A sighted keyboard user could certainly use this tool to assist with testing.

I also rely on other automated tools to review other aspects of the page. I generally use the Web Developer extension by Chris Pederick to view the document structure. I find the graphical depiction of the layout helpful to explain the problem.

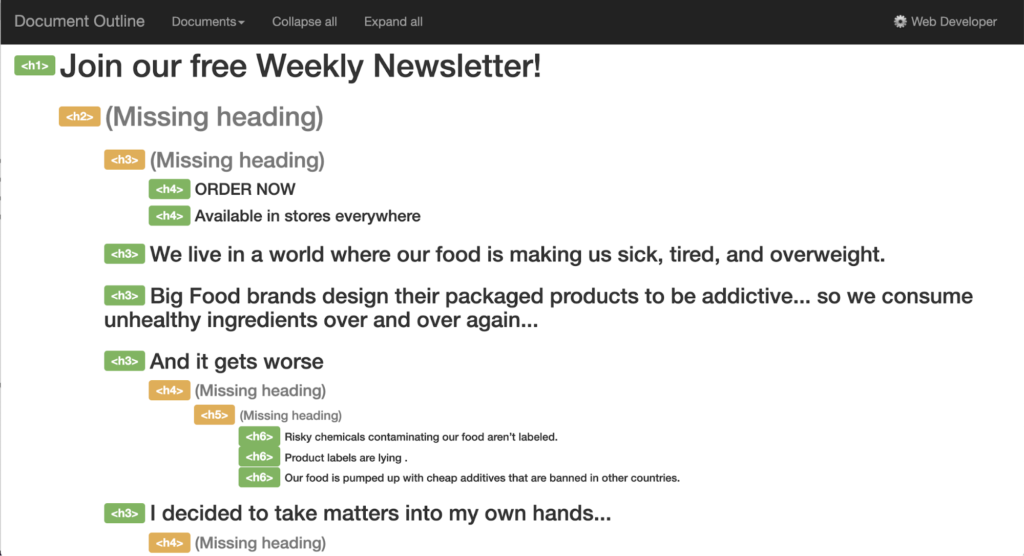

The screenshot below demonstrates the use of headings for styling rather than structure. The heading level 1, <h1>, contents, “Join our free Weekly Newsletter!,” does not describe the main topic of the page. There is no <h2> nor <h3> following the <h1>. The headings are not used to describe the topics within the page. There is no hierarchical organization.

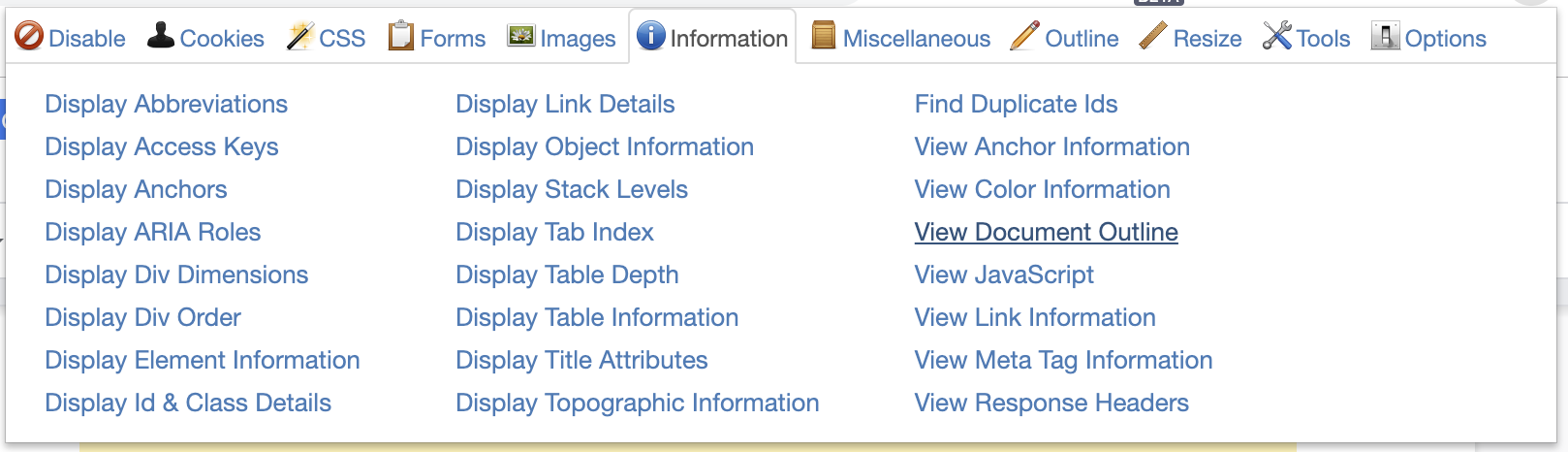

The Web Developer toolbar is not just meant for accessibility testing; it has many options to assist in testing and general development. The screen capture below shows the information panel. The View Document Outline option is underlined. This was used to generate the outline shown in the previous screenshot. This is just one of the many panels and options used to further inspect a page.
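A document outline like the one described above can be approximated in a few lines. This is a rough sketch with hypothetical names, not the extension's implementation: it collects the `<h1>` to `<h6>` elements in order and flags places where the heading level jumps by more than one.

```python
import re
from html.parser import HTMLParser


class HeadingParser(HTMLParser):
    """Collects (level, text) pairs for h1-h6 elements in document order."""

    def __init__(self):
        super().__init__()
        self.headings = []
        self._level = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if re.fullmatch(r"h[1-6]", tag):
            self._level = int(tag[1])
            self._text = []

    def handle_data(self, data):
        if self._level is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if self._level is not None and tag == f"h{self._level}":
            # Collapse runs of whitespace inside the heading text.
            text = " ".join("".join(self._text).split())
            self.headings.append((self._level, text))
            self._level = None


def heading_outline(html):
    """Return the headings of an HTML string as (level, text) pairs."""
    parser = HeadingParser()
    parser.feed(html)
    parser.close()
    return parser.headings


def skipped_levels(headings):
    """Flag a first heading that is not h1 and any jump of more than one level."""
    problems = []
    previous = None
    for level, text in headings:
        if previous is None:
            if level != 1:
                problems.append(f"first heading '{text}' is h{level}, not h1")
        elif level > previous + 1:
            problems.append(f"'{text}' jumps from h{previous} to h{level}")
        previous = level
    return problems
```

The script can show that an `<h1>` is followed directly by an `<h4>`, but it cannot tell whether the `<h1>` text describes the main topic of the page. That judgment remains a manual step.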

There are many different testing tools available. In addition to those already mentioned, here are a few that I have installed and use. I have not tested the accessibility of these:

- JavaScript Bookmarklets for Accessibility Testing from Paul J. Adam

- axe tool from Deque

- Microsoft Accessibility Insights for Web

- Landmarks Extension by Matthew Tylee Atkinson

- Dynamic Assessment Plugin from IBM

There are many additional tools and extensions that work just as well. Find the ones that work best for you.

After a first pass using automated tools I will attempt to interact with the website using only my keyboard. I will begin tabbing through the page to determine if:

- There is a visible indicator when an item receives focus.

- All actionable items receive focus and can be operated with only the keyboard. This includes links, buttons, form elements, menus, tab panels, accordions, and many other interactive elements. Drop down or fly out menus often lack keyboard support and will work only with the mouse.

While using the keyboard, I am aware of other possible issues. While verifying that a link operates correctly with the keyboard, I will note if it opens in a new window unexpectedly. This would violate WCAG 2.1 success criterion 3.2.2 On Input because the context has changed (a new window appeared) when the link was activated. Users should be made aware of links that will open in a new window. I will notice and record other problems as I test with the keyboard.
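Links that open a new window can also be shortlisted from the markup before keyboard testing begins. The sketch below is a heuristic under stated assumptions: it only looks at `target="_blank"`, so windows opened by script are missed, and the list of warning phrases is my own guess at common wording rather than anything WCAG prescribes.

```python
from html.parser import HTMLParser

# Assumed wording for a warning; adjust to match the site's conventions.
WARNING_PHRASES = ("new window", "new tab")


class NewWindowLinkParser(HTMLParser):
    """Finds target="_blank" links whose label gives no new-window warning."""

    def __init__(self):
        super().__init__()
        self.unannounced = []
        self._current = None  # details of the <a target="_blank"> being read

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "a" and (attrs.get("target") or "").lower() == "_blank":
            self._current = {
                "href": attrs.get("href") or "",
                "label": [attrs.get("aria-label") or "", attrs.get("title") or ""],
            }
        elif tag == "img" and self._current is not None:
            # Alt text of an icon inside the link counts as part of its label.
            self._current["label"].append(attrs.get("alt") or "")

    def handle_data(self, data):
        if self._current is not None:
            self._current["label"].append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._current is not None:
            label = " ".join(" ".join(self._current["label"]).split()).lower()
            if not any(phrase in label for phrase in WARNING_PHRASES):
                self.unannounced.append(self._current["href"])
            self._current = None


def unannounced_new_window_links(html):
    """Return the hrefs of new-window links that carry no visible warning."""
    parser = NewWindowLinkParser()
    parser.feed(html)
    parser.close()
    return parser.unannounced
```

The output is a list of candidates to confirm by hand with the keyboard and a screen reader, not a list of confirmed failures.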

Once I’ve gone through the site with the keyboard, I will use a screen reader. Screen readers will read the page out loud to the user. I will test with VoiceOver on macOS and JAWS and NVDA (NonVisual Desktop Access) on Windows 10. I will use a combination of these screen readers to test the site. When items do not work or are not announced by the screen reader, I often have to dive into the code to determine the exact problem. I use my programming background and knowledge of the Accessible Rich Internet Applications (ARIA) specification to find and document the problem and possible solutions.

Next, I test for responsive design and verify that the site works properly with browser zoom and magnification. There are also problems that affect speech input users. Testing is a complicated process and requires review with various tools and assistive technologies. It takes significant time to review, find, and document the issues and solutions. I have only provided a brief overview of some of my testing strategy. Each tester will have strengths and weaknesses using the tools and assistive technologies. That is why it is important to have testers of all levels of experience and abilities and to include people with disabilities in the testing process.

Including Testers with Disabilities

Someone with a disability is likely to do a better job than me at finding the errors that directly affect them.

Blind Testers

You might argue that there are issues that a blind tester cannot identify. For example, a blind user cannot verify the accuracy of alternative text. This requires manual testing and a visual check to determine if the alt text provides an adequate description. However, a blind screen reader user will be much faster at finding missing or bogus alt text. When they encounter an image without alt text, a screen reader user will typically hear the file name of the image announced instead. They will also easily identify images with inappropriate alt text such as “null,” “needs description,” or other placeholder text. As someone who relies on sight, I might miss some of these items during testing.
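Missing and placeholder alt text is also something a short script can shortlist, which can help a sighted tester who might otherwise overlook it. This is a sketch only: "null" and "needs description" come from the examples above, while the remaining entries in `PLACEHOLDER_ALTS` and the file-extension check are my own assumptions about what placeholder text tends to look like.

```python
from html.parser import HTMLParser

# "null" and "needs description" are from the article; the rest are assumed.
PLACEHOLDER_ALTS = {"null", "needs description", "image", "photo", "untitled"}
IMAGE_EXTENSIONS = (".png", ".jpg", ".jpeg", ".gif")


class AltTextParser(HTMLParser):
    """Records images with no alt attribute or with placeholder alt text."""

    def __init__(self):
        super().__init__()
        self.findings = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attrs = dict(attrs)
        src = attrs.get("src") or "(no src)"
        if "alt" not in attrs:
            self.findings.append((src, "missing alt attribute"))
            return
        # alt="" marks a decorative image, so an empty value is not flagged.
        alt = (attrs["alt"] or "").strip().lower()
        if alt in PLACEHOLDER_ALTS or alt.endswith(IMAGE_EXTENSIONS):
            self.findings.append((src, f"placeholder alt text: {alt!r}"))


def suspicious_alt_text(html):
    """Return (src, reason) pairs for images that need a closer look."""
    parser = AltTextParser()
    parser.feed(html)
    parser.close()
    return parser.findings
```

An image with `alt=""` is left alone here because it may be correctly marked as decorative. Deciding whether that marking is appropriate, or whether real alt text is accurate, still requires someone to look at the image.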

Screen reader users have told me that they rely on headings to navigate a page. Much like sighted users visually review headings to find information on a page, screen reader users will review a list of the headings on the page. They can then easily and quickly select and navigate to one of the headings on the page. A site with poorly organized or non-descriptive headings will be readily identified. The screen reader will also display a list of links or other interactive elements on the page. The screen reader quickly exposes the repeated links on a site such as “read more,” “click here,” or other repeated phrases.

While I am competent using a screen reader, I am confident that a native screen reader user is going to be many times more capable than I am at using their assistive technology! Through everyday use they encounter repeated errors and patterns. These may include improperly labeled form elements, improper use of ARIA, and non-accessible popups and dialogs. Thus, they will easily and quickly find and report these errors.
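The links list that a screen reader presents can be imitated roughly in code. The following sketch, with hypothetical names, groups links by their visible text and reports any text that is a generic phrase or that points to more than one destination. It ignores `aria-label` and image alt text, so it is a simplification of what a screen reader would actually announce.

```python
from collections import defaultdict
from html.parser import HTMLParser

# Generic phrases taken from the examples above; extend as needed.
GENERIC_PHRASES = {"read more", "click here"}


class LinkTextParser(HTMLParser):
    """Collects (text, href) pairs for every <a href> in the document."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            attrs = dict(attrs)
            if "href" in attrs:
                self._href = attrs["href"] or ""
                self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            text = " ".join("".join(self._text).split()).lower()
            self.links.append((text, self._href))
            self._href = None


def ambiguous_links(html):
    """Map link text to its destinations when the text alone is ambiguous."""
    parser = LinkTextParser()
    parser.feed(html)
    parser.close()
    targets = defaultdict(set)
    for text, href in parser.links:
        targets[text].add(href)
    return {
        text: sorted(hrefs)
        for text, hrefs in targets.items()
        if text in GENERIC_PHRASES or len(hrefs) > 1
    }
```

A native screen reader user gets this view instantly and in context, so a script like this is at best a supplement to their testing.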

Low Vision Testers

There are many types and categories of low vision. People who use screen magnification are likely to catch errors that others may miss. Popups can cause problems at high screen magnification. Hovering the mouse over an element may trigger a popup with more content. If the screen is magnified to 400% or more, the user may have to scroll to view the contents of the popup. However, when they move the mouse off of the trigger, the popup will close. Thus, they can never read it. This is why WCAG 2.1 added success criterion 1.4.13 Content on Hover or Focus. It adds new requirements for how content such as popups is dismissed. As a sighted mouse user who does not rely on screen magnification, I may miss these types of issues.

Low vision users may rely on Windows high contrast mode or other programs to make the screen easier to see. Windows high contrast mode removes background images and colors. The user sets the size and colors for text, hyperlinks, disabled text, selected text, button text, and backgrounds that are best suited to their vision. Many websites use CSS background images to identify various icons and features. Examples include text editor buttons for text styling such as bold, italics, etc. or social icons to identify links to a website’s social presence. These errors are quickly identified because they will appear blank to testers using high contrast mode.

People with color blindness or low vision may have difficulty distinguishing colors. When only color is used to identify items, testers with this condition may not be able to tell the items apart. For example, I might not catch that the instructions say to press the green button to continue a process and the red button to stop. Someone who cannot distinguish those colors will immediately point out the ambiguity and the failure of success criterion 1.4.1 Use of Color.

People with cognitive, aging, or learning disabilities

As someone who has reached middle-age and perhaps beyond, I am often frustrated with the user interfaces I encounter, especially on mobile devices. My memory is not always up to the task of remembering the user interface nuances I encounter within each web application I use. This observation is coming from someone technical – I was a coder for most of my career! Memory is only one aspect of the broad realm of cognitive disabilities. People may have other types of learning or comprehension issues. People who think differently or have less experience can certainly be great testers. In addition to conventional testing, they can quickly point out the lack of clear instructions, confusing error messages, or overly complex descriptions that others may miss.

I hope these examples point out that people of all abilities can contribute to accessibility testing. People with different abilities will have various strengths and weaknesses. Part of accessibility testing is finding and reporting all errors.

Incorporating People with Disabilities into Testing

Vision Aid is an organization in India whose mission is, “Serving the visually disadvantaged in under-served areas”. They are using the Deque tools to train blind individuals as testers. Once trained, these people can find jobs performing accessibility audits. Glenda Sims of Deque worked with Vision Aid to establish this program.

To overcome some of the issues that a blind accessibility expert may have testing a site, Glenda uses paired testing with her employees at Deque. A blind accessibility expert is paired with a sighted assistant. Glenda provides a spreadsheet highlighting which WCAG success criteria require sighted assistance. Note that the sighted assistant may have little or no accessibility knowledge. She classifies the effort needed by the sighted assistant as high, medium, or low. The sheet contains the Deque checkpoint number and the portion of the checkpoint that needs assistance. Finally, it includes the task to be performed by the sighted assistant.

For example, verifying Text or Audio Description (Prerecorded Video Only with No Dialogue) is a high effort task. The assistant has to validate that the text or audio description is appropriate for the video. This task is completed by factually describing the video images. A low effort task is to factually describe images to determine if the alt text is appropriate.

Paired testing benefits all of the people involved. The sighted assistant gains experience from the blind accessibility expert. They better understand the navigation issues faced by screen reader users. The sighted assistant can explain some of the more complex visual constructs to the blind accessibility expert. This practice helps them to understand how certain errors appear within the screen reading context. It is a win-win for all. Similar team pairing can be used to support other disabilities.

Conclusion

I hope I have demonstrated that anyone with the necessary technical skills can perform accessibility testing. There is no excuse for not employing accessibility experts of all abilities. Even though testing may require a bit more time or effort for some, the result is a more thorough report. Fewer issues will be overlooked because the experience of your testers with disabilities adds another level of inspection. Providing sensory assistance empowers people with disabilities to perform comprehensive accessibility testing. As a bonus, this practice enhances the knowledge of everyone on the team.

Does your organization employ accessibility experts of all abilities to perform accessibility review and testing? If not, you are missing the potential to:

- Provide more employment opportunities for people with disabilities.

- Diversify your workforce – studies show that diverse groups are more innovative. [1]

- Enable better usability and accessibility of your digital assets.

- Promote digital inclusion and enhance the knowledge of your team.

Inspiration

This article was inspired by my participation in a panel at the Boston Accessibility Conference on November 1, 2019. The panel, Accessible Accessibility Testing, was moderated by Glenda Sims, Team A11y Lead at Deque Systems, Inc., and sponsored by Ram Raju of Vision Aid. In addition to Glenda and me, participants included:

- Cheryl Cummings, Founder/Executive Director at Our Space Our Place, Inc.

- Jeanne Spellman, Chair of the Silver Task Force, part of the W3C Web Accessibility Initiative's Accessibility Guidelines Working Group (AG WG).

- Rich Caloggero, Independent contractor with MIT Assistive Technology Laboratory and WGBH National Center for Accessible Media

I thank them for allowing me to share the information and expertise they provided to the panel and to this article.

1. How Diversity can drive innovation, Harvard Business Review https://hbr.org/2013/12/how-diversity-can-drive-innovation

The Case for Improving Work for People with Disabilities Goes Way Beyond Compliance https://hbr.org/2017/12/the-case-for-improving-work-for-people-with-disabilities-goes-way-beyond-compliance

Birkir Gunnarsson says:

Great article, Becky!

I particularly love how you describe the challenges of a low vision user. As a blind accessibility tester I am fascinated with understanding the challenges faced by users with other types of disabilities, and your description of inaccessible tooltips was a thing of beauty.

Not to waste too much time playing WCAG gymnastics but links that open in a new window do not violate WCAG 3.2.2 On Input. Links and buttons are specifically excluded from this requirement because activating a link is different from changing its settings. To quote the “understanding” text

“…checking a checkbox, entering text into a text field, or changing the selected option in a list control changes its setting, but activating a link or a button does not.”

That being said, I absolutely agree with your opinion that this should be a level A or AA requirement, not a level AAA requirement (WCAG 3.2.5).

At my organization I’ve established this as a standard requirement.

Keep up the good work and thanks to Knowbility for constantly driving accessibility forward.

Becky Gibson says:

Thanks for the positive feedback. As far as 3.2.2 On Input goes, it is a matter of interpretation. I do believe that a link that opens in the same window is standard, expected behavior. It is not covered by 3.2.2. However, when a link opens in a new window without warning, I believe that is a change of context. The behavior is not standard and the user can become confused. I guess that is why WCAG is a guideline and not a legal standard. 🙂

Doret says:

Improving web sites to match the accessibility guidelines is very useful, but if the sites use an old-style Captcha that doesn’t provide an audio alternative that can really be used by the blind, all those guidelines might become useless for us. So the first rule should be “Never use captcha!”. In the exceptionally rare cases when captcha is really necessary, when it is obvious that somebody will make a specially designed robot to steal contact data *from that site*, then yes, use captcha, but one that provides an audio alternative. A general robot that can search any site for harvesting email addresses can be easily defeated by other security solutions and captcha is not necessary.

Becky Gibson says:

I understand your concerns about CAPTCHA. In fact, the W3C has published a note about the Inaccessibility of CAPTCHA and tried to offer some alternatives.

Bob Cowell says:

Thank you for this article.

The organization I work for has relatively recently begun designing with accessibility in mind. However, that means we have to also get other projects up to speed as well. I found things I’d like to add to my process and a couple new tools.

I appreciate you sharing.

Jonathan Douglas Holden says:

Thanks for pointing out “Web Developer” – lovely tool.