Over the past few years, more and more frontend optimizers have offered a way to self-host or proxy third-party resources. Akamai lets you define a specific behavior for self-crafted URLs, Cloudflare has its Edge Worker, and Fasterize can rewrite the URLs on a page so that third-party resources are referenced via the primary domain.

If you know that your third-party services do not change frequently and that the delivery of their assets can be improved, then you should consider proxying these services. This way, you can potentially serve these assets from a closer edge location and gain greater control over their client-side caching. This also ensures that your users won't be affected if your third-party provider goes down or suffers from degraded performance.
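To make the idea of URL rewriting concrete, here is a minimal sketch, not the way any of the products above actually work. The allowlisted host `cdn.example-thirdparty.com` and the `/_3p` path prefix are hypothetical, and a real implementation would use an HTML parser rather than a regular expression.

```python
import re
from urllib.parse import urlsplit

# Hypothetical allowlist of third-party hosts we are willing to proxy.
PROXIED_HOSTS = {"cdn.example-thirdparty.com"}
# Hypothetical path prefix on the primary domain that the CDN routes upstream.
PROXY_PREFIX = "/_3p"

# Matches src="..." or href="..." attributes holding an absolute http(s) URL.
_URL_ATTR = re.compile(r'(?P<attr>\b(?:src|href)=")(?P<url>https?://[^"]+)"')


def rewrite_third_party_urls(html: str) -> str:
    """Point allowlisted third-party URLs at a path on the primary domain."""

    def _rewrite(match: re.Match) -> str:
        parts = urlsplit(match.group("url"))
        if parts.hostname not in PROXIED_HOSTS:
            return match.group(0)  # leave unknown hosts untouched
        path = parts.path or "/"
        query = f"?{parts.query}" if parts.query else ""
        return f'{match.group("attr")}{PROXY_PREFIX}/{parts.hostname}{path}{query}"'

    return _URL_ATTR.sub(_rewrite, html)
```

Keeping the original host name in the rewritten path lets the proxy know where to fetch the resource from without an extra lookup table.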

The Good: performance improvements

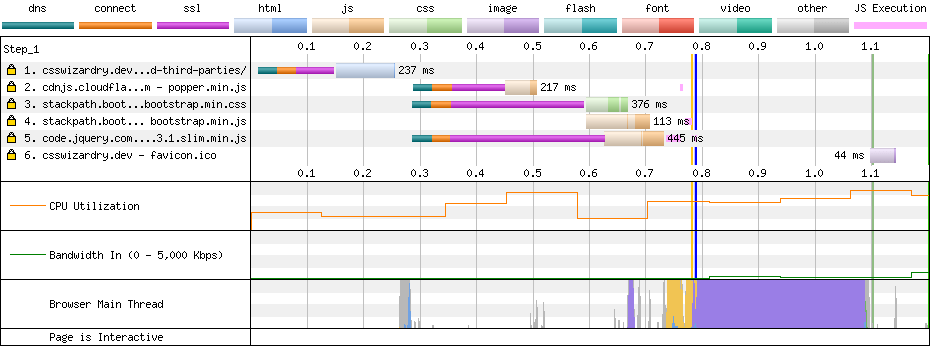

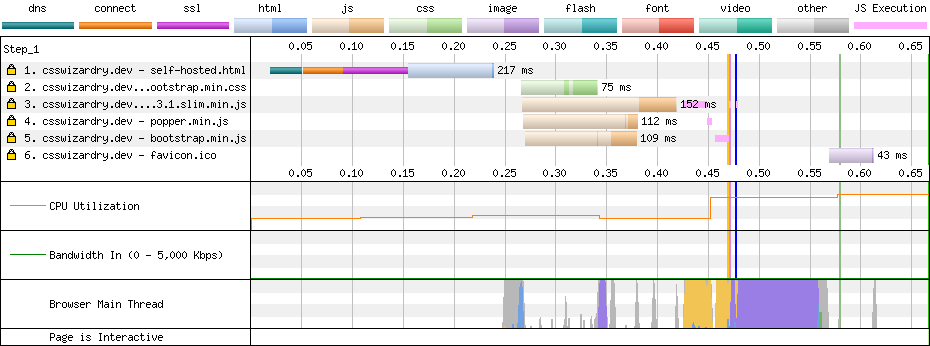

The impact in terms of performance is straightforward, the browser will save a DNS resolution, TCP connection and TLS handshake on a third-party domain. You can visually see the difference in the two waterfalls below:

Credit: https://csswizardry.com/2019/05/self-host-your-static-assets/

Credit: https://csswizardry.com/2019/05/self-host-your-static-assets/

Moreover, the browser will leverage the HTTP/2 multiplexing and prioritization established on the main domain.

Without self-hosting, third-party resources are served from a different domain than the main page, so they cannot be prioritized against first-party resources and end up competing with them for download bandwidth. This can make the actual fetch times of critical primary resources much longer than the best case.

Patrick Meenan explains it very well in his talk on HTTP/2 Prioritization.

We could assume that preconnecting to every critical third-party domain would do the trick, but preconnecting to too many domains may actually saturate the bandwidth at a critical moment.

By self-hosting third-party resources, you control how they are served, so:

- you can ensure that the best compression algorithm supported by each browser is used (Brotli/gzip)

- you can extend cache control headers, which are traditionally short even for the best-known providers (the GA tag, for example, is cached for only 30 minutes)

You can even extend the TTL to one year by including these assets in your cache management strategy (URL hash, versioning, and so on). We will discuss this point later in the article.
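The two bullet points and the one-year TTL can be sketched as follows. This is an illustration under stated assumptions, not a CDN configuration: the function names are hypothetical, the hash length is arbitrary, and `choose_encoding` ignores q-values for brevity.

```python
import hashlib

ONE_YEAR_SECONDS = 365 * 24 * 60 * 60


def versioned_path(name: str, content: bytes) -> str:
    """Embed a short content hash in the file name (name.<hash>.ext)."""
    digest = hashlib.sha256(content).hexdigest()[:8]
    stem, dot, ext = name.rpartition(".")
    return f"{stem}.{digest}.{ext}" if dot else f"{name}.{digest}"


def choose_encoding(accept_encoding: str) -> str:
    """Prefer Brotli, fall back to gzip, else send the body uncompressed.

    Simplification: q-values in the Accept-Encoding header are ignored.
    """
    offered = {
        token.split(";")[0].strip().lower()
        for token in accept_encoding.split(",")
    }
    for candidate in ("br", "gzip"):
        if candidate in offered:
            return candidate
    return "identity"


def long_lived_headers() -> dict:
    """Cache headers that are safe only because the URL changes with the content."""
    return {"Cache-Control": f"public, max-age={ONE_YEAR_SECONDS}, immutable"}
```

Because the hash is derived from the content, a new version of the asset gets a new URL, which is what makes a one-year TTL safe.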

No Service Shutdowns or Outages

Another interesting aspect of self-hosting third-party assets is the mitigation of risks associated with slowdowns and outages. Let’s say that your A/B test solution is very slow and is implemented as a blocking script in the head section, either the page will stay blank or the page will appear after a long timeout. Also, if you use a library from a third-party CDN, you are exposed to break the JS logic of your website if the third-party provider is down or blocked in a country.

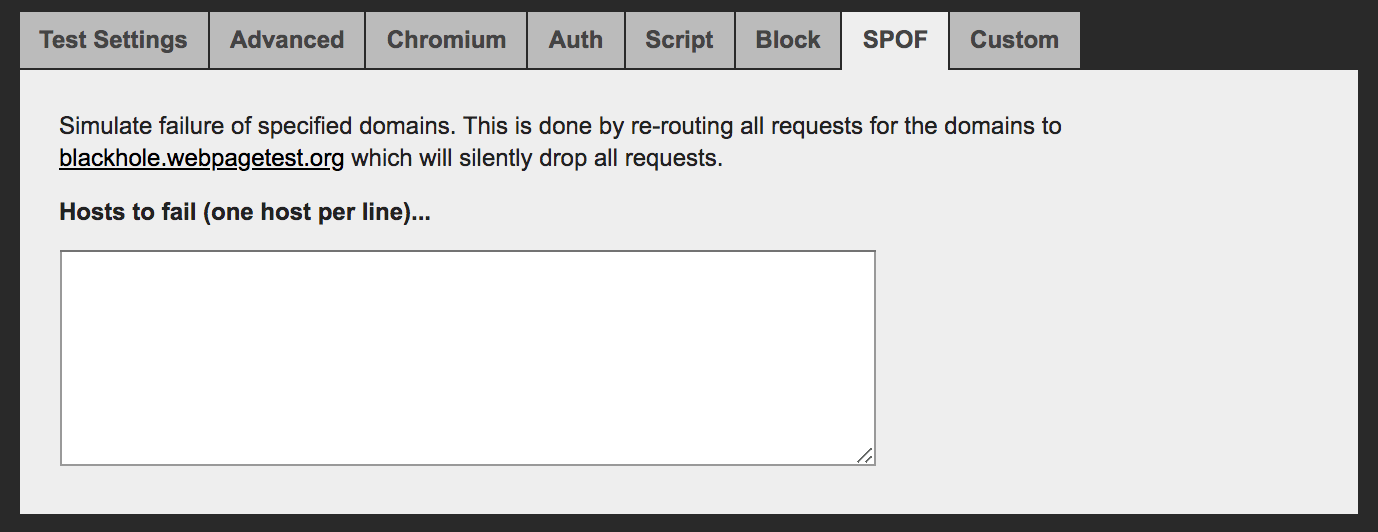

You can have a look at the SPOF section on Webpagetest to verify how your website behaves when an external service is broken:

What about the browser caching penalty? (Spoiler: it is a myth)

You may think that using a public CDN automatically leads to better performance, since public CDNs have a better network and are widely distributed, but the reality is more complex.

Let’s say we have several different websites: website1.com, website2.com, website3.com. We use jQuery on all of them and include it from a CDN like googleapis.com. The expected behavior would be for the browser to cache it once and reuse it on all the other websites. This would reduce hosting costs for the sites and improve performance. In practice, this is not the case. Safari implements a feature called Intelligent Tracking Protection, under which the cache is double-keyed based on the document origin and the third-party origin.

This is well explained in the article Safari, Caching and Third-Party Resources by Andy Davies.
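The effect of double-keying can be illustrated with a toy model. This is a deliberately simplified sketch, not Safari's actual implementation: the class names are hypothetical, and eviction, expiry and revalidation are all ignored.

```python
class SharedCache:
    """Toy single-keyed cache: one entry per resource URL, whatever the site."""

    def __init__(self) -> None:
        self._entries: set = set()

    def _key(self, top_level_site: str, resource_url: str):
        return resource_url

    def fetch(self, top_level_site: str, resource_url: str) -> bool:
        """Return True on a cache hit, then remember the resource."""
        key = self._key(top_level_site, resource_url)
        hit = key in self._entries
        self._entries.add(key)
        return hit


class DoubleKeyedCache(SharedCache):
    """Toy partitioned cache: entries are scoped to the site being visited."""

    def _key(self, top_level_site: str, resource_url: str):
        return (top_level_site, resource_url)
```

With `SharedCache`, a copy of jQuery fetched while visiting website1.com is a hit on website2.com. With `DoubleKeyedCache`, the second site misses and downloads the file again, so the cross-site benefit of the public CDN disappears.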

Older research by Yahoo and Facebook, and more recent research by Paul Calvano, demonstrated that resources don’t live as long as we might expect in the browser’s cache:

There is a significant gap in the 1st vs 3rd party resource age of CSS and web fonts. 95% of first party fonts are older than 1 week compared to 50% of 3rd party fonts which are less than 1 week old! This makes a strong case for self hosting web fonts!

So, no, you won’t notice a performance degradation due to browser caching.

Now that you have a good view of the pros, let’s see what makes the difference between a good and a bad implementation.

The Bad: the devil is in the details

Third-party resources cannot be moved to the main domain automatically without taking care of how they are cached.

One major caveat is the lifecycle of the asset. Third-party scripts that are versioned, like jquery-3.4.1.js, won’t cause any caching issue since the file will never change in the future.

But if there is no versioning in place, cached scripts can go out of date. This can be a serious issue: you may miss important security fixes unless you update manually, and you may even introduce bugs if a tag falls out of sync with its backend.

Caching a resource that is updated often (tag managers, A/B testing solutions) on the CDN is harder, but not impossible. Services like Commander Act, a tag manager solution, call a webhook when a new version is published. This makes it possible to trigger a flush on the CDN or, better, to update the hash or version in the URL.
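The webhook-driven update could look like the sketch below. Everything in it is an assumption made for illustration: the payload keys (`asset_name`, `asset_url`), the `/_3p` prefix and the `fetch` callable are hypothetical and do not describe the webhook format of any particular vendor.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class AssetRegistry:
    """Tracks the versioned URL that pages should currently reference."""

    base_path: str = "/_3p"  # hypothetical proxy prefix on the primary domain
    current: Dict[str, str] = field(default_factory=dict)

    def publish(self, name: str, content: bytes) -> str:
        """Record a new version of an asset and return its versioned URL."""
        digest = hashlib.sha256(content).hexdigest()[:8]
        self.current[name] = f"{self.base_path}/{name}?v={digest}"
        return self.current[name]


def handle_publish_webhook(
    registry: AssetRegistry,
    payload: dict,
    fetch: Callable[[str], bytes],
) -> str:
    """Refresh one asset after the vendor signals a new version.

    The payload keys and the fetch callable are assumptions for this sketch.
    """
    content = fetch(payload["asset_url"])
    return registry.publish(payload["asset_name"], content)
```

Changing the version in the URL avoids having to purge the CDN and the browser caches: the old URL simply stops being referenced.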

Adaptive serving

Also, when we talk about caching, we need to consider that the cache settings on the CDN may not be suitable for a third-party asset. Third-party services may implement User-Agent sniffing (also called adaptive serving) to serve versions optimized for a given browser. They apply regular expressions or a database lookup to the User-Agent HTTP header to determine the browser's capabilities and serve an optimized version.

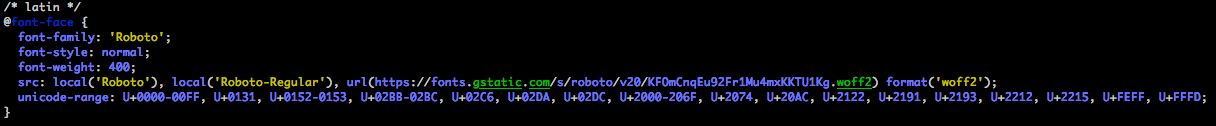

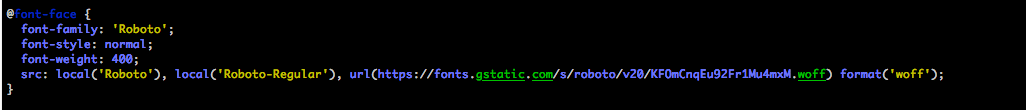

Two cases come to mind: googlefonts.com and polyfill.io. Google Fonts serves at least 5 differents CSS file versions for a resource depending on the UserAgent capability (referencing woff2, unicode-range…).

Google font requested by Chrome

Google font requested by IE10

Polyfill.io returns only the polyfills that are required by the browser for performance reasons.

For example, the response to https://polyfill.io/v3/polyfill.js?features=default weighs 34K when requested by IE10 and is empty when requested by Chrome.
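One way to keep a CDN cache compatible with adaptive serving is to include a coarse User-Agent bucket in the cache key. The sketch below is an assumption-laden illustration: the bucket names and regular expressions are hypothetical and do not mirror the detection logic used by Google Fonts or polyfill.io.

```python
import re


def ua_bucket(user_agent: str) -> str:
    """Collapse a User-Agent string into a coarse capability bucket."""
    ua = user_agent or ""
    if re.search(r"MSIE |Trident/", ua):
        return "legacy-ie"
    if re.search(r"(?:Chrome|Firefox|Edg)/\d+", ua):
        return "modern"
    return "other"


def cache_key(url: str, user_agent: str) -> str:
    """Build a cache key so browsers in different buckets never share a response."""
    return f"{url}|ua={ua_bucket(user_agent)}"
```

The buckets must be at least as fine-grained as the variations produced by the third party. If that cannot be guaranteed, it is safer not to cache the response on the CDN at all.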

The Ugly: a closing note about privacy concerns

Last but not least, serving third-party services through the main domain or a subdomain is not neutral for user privacy or for your business.

If your CDN is misconfigured, you may end up sending the cookies of your own domain to a third-party service. Your session cookies, which are normally not accessible from JavaScript (thanks to the HttpOnly attribute), will be sent to a third-party host if there is no filtering at the CDN level.

This is exactly what can happen with trackers like Eulerian, Criteo… Third-party trackers used to be able to set a unique identifier in cookies that they could read at will on the different websites you visit, as long as the tracker was included by the website.

Most browsers now include protections against this practice. So trackers now ask websites to disguise them as first-party resources behind a random subdomain, with a CNAME pointing to a generic, unbranded domain. This method is called CNAME Cloaking.

While it is considered bad practice for a website to set cookies accessible to all subdomains (i.e., *.website.com), many websites do this. In that case, those cookies are automatically sent to the cloaked third-party tracker. Game over.

The same goes for Client Hints HTTP headers, which are only sent to the primary domain because they can be used to fingerprint users. Be sure that your CDN correctly filters those headers as well.
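The filtering described above can be sketched as a function applied to request headers before they are forwarded to the third-party origin. The function name and the exact header lists are assumptions for illustration and should be adapted to what your CDN exposes.

```python
# Credentials that must never leave the first-party context.
SENSITIVE_HEADERS = {"cookie", "authorization"}

# Client Hints that predate the Sec-CH- prefix.
LEGACY_CLIENT_HINTS = {
    "dpr", "width", "viewport-width", "device-memory",
    "downlink", "ect", "rtt", "save-data",
}


def filter_upstream_headers(headers: dict) -> dict:
    """Drop cookies, credentials and Client Hints before proxying upstream."""
    forwarded = {}
    for name, value in headers.items():
        lowered = name.lower()  # HTTP header names are case-insensitive
        if lowered in SENSITIVE_HEADERS or lowered in LEGACY_CLIENT_HINTS:
            continue
        if lowered.startswith("sec-ch-"):
            continue
        forwarded[name] = value
    return forwarded
```

The same care applies in the other direction: Set-Cookie headers returned by the third party should usually be stripped as well, so that it cannot write cookies on your domain.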

Conclusion

If you want to implement self-hosting tomorrow, my two cents are:

- Self-host your critical JS libraries, fonts and CSS. This will reduce the risk of SPOF or degraded performance on the critical path.

- Before caching a third-party resource on a CDN, be sure that it is versioned, or that you can manage its life cycle by flushing the CDN, manually or even automatically, when a new version is published.

- Double-check your CDN/proxy/cache configuration to avoid sending first-party cookies or client hints to third-party services.